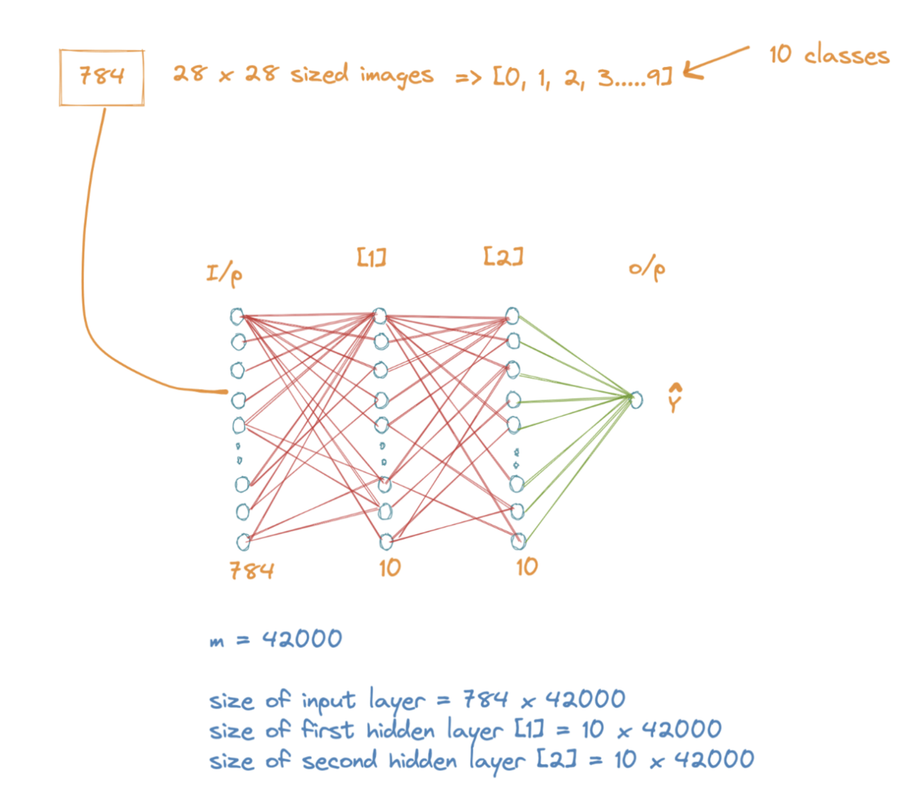

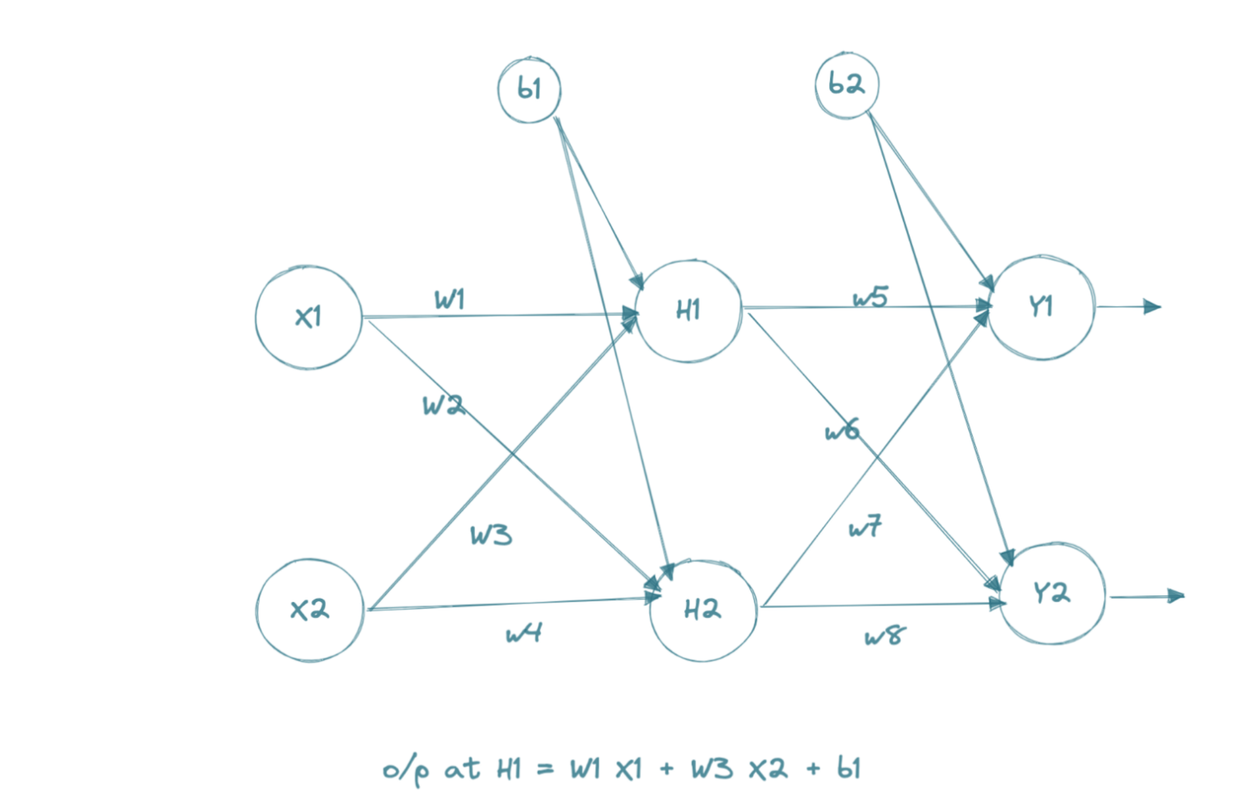

Problem statement - Classify the hand written digits with help of a basic neural net designed from scratch

Data - MNIST data present at https://www.kaggle.com/datasets/animatronbot/mnist-digit-recognizer

Methodology Write the forward and backpropagation functions from scratch using simple maths and calculus

import numpy as np

import pandas as pd

from matplotlib import pyplot as plt

data = pd.read_csv('/kaggle/input/mnist-digit-recognizer/train.csv')

data.head(10)

data.shape

Transpose the data, so that one column is one image. The shape would be 785*42000 after transpose

data = np.array(data)

m, n = data.shape

np.random.shuffle(data) # shuffle before splitting into test and training sets

data_test = data[0:2000].T

Y_test = data_test[0]

X_test = data_test[1:n]

X_test = X_test / 255.

data_train = data[2000:m].T

Y_train = data_train[0]

X_train = data_train[1:n]

X_train = X_train / 255.

_,m_train = X_train.shape

X_train.shape

Y_train

def init_params():

W1 = np.random.rand(10, 784) - 0.5

b1 = np.random.rand(10, 1) - 0.5

W2 = np.random.rand(10, 10) - 0.5

b2 = np.random.rand(10, 1) - 0.5

return W1, b1, W2, b2

def ReLU(Z):

return np.maximum(Z, 0)

def softmax(Z):

A = np.exp(Z) / sum(np.exp(Z))

return A

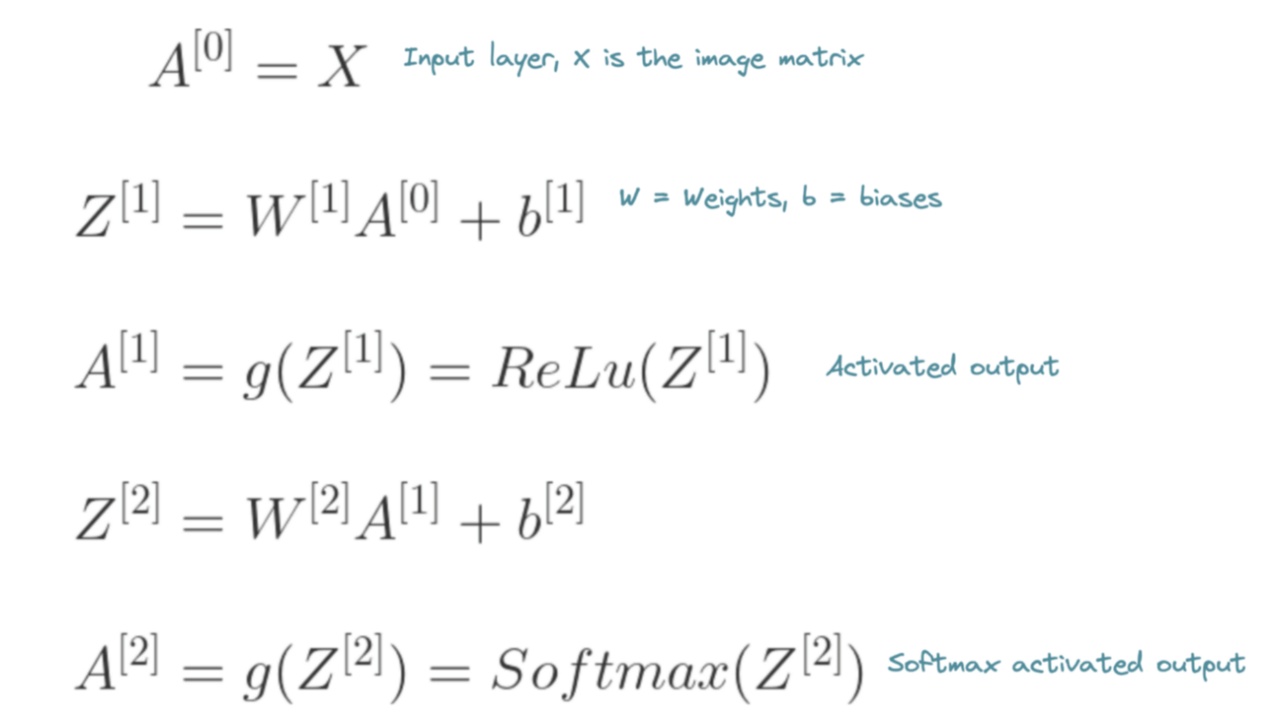

def forward_prop(W1, b1, W2, b2, X):

Z1 = W1.dot(X) + b1

A1 = ReLU(Z1)

Z2 = W2.dot(A1) + b2

A2 = softmax(Z2)

return Z1, A1, Z2, A2

def ReLU_deriv(Z):

return Z > 0

def one_hot(Y):

one_hot_Y = np.zeros((Y.size, Y.max() + 1))

one_hot_Y[np.arange(Y.size), Y] = 1

one_hot_Y = one_hot_Y.T

return one_hot_Y

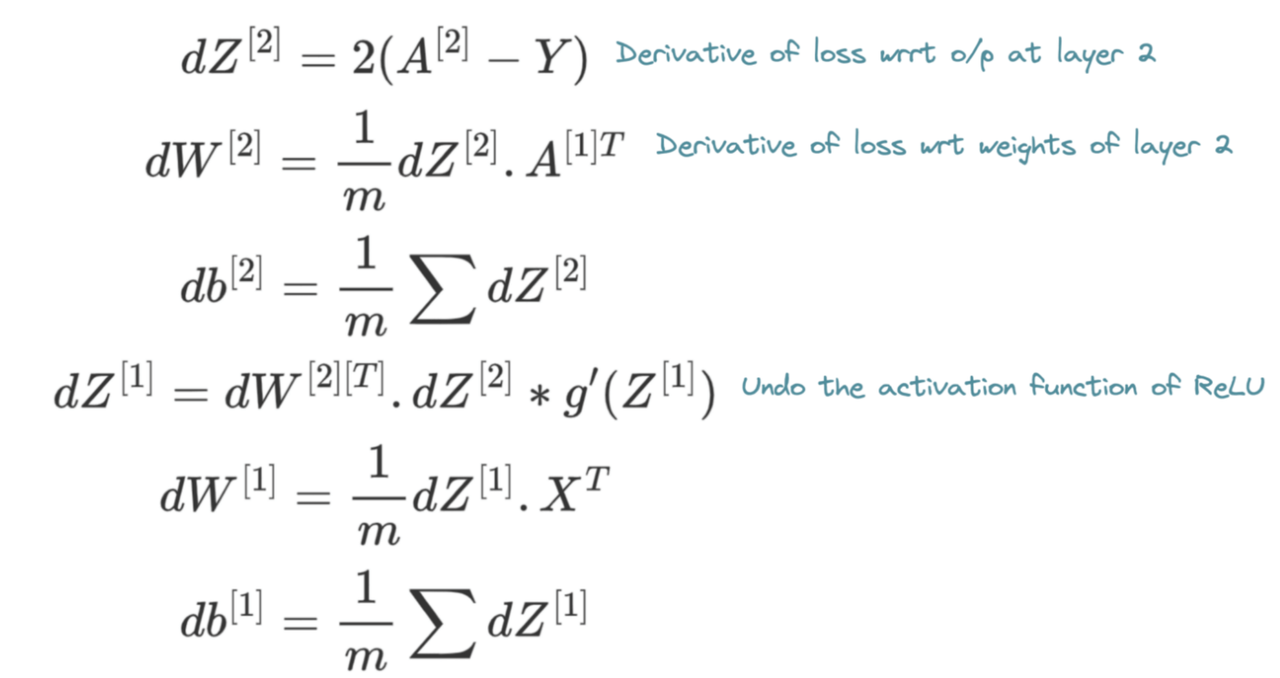

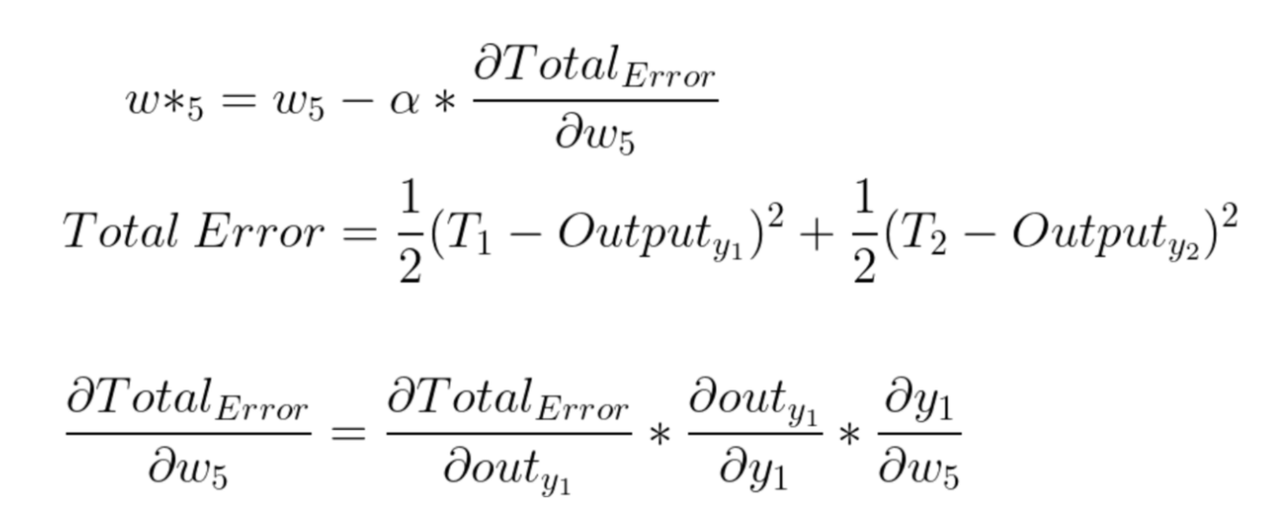

def backward_prop(Z1, A1, Z2, A2, W1, W2, X, Y):

one_hot_Y = one_hot(Y)

dZ2 = 2*(A2 - one_hot_Y)

dW2 = 1 / m * dZ2.dot(A1.T)

db2 = 1 / m * np.sum(dZ2)

dZ1 = W2.T.dot(dZ2) * ReLU_deriv(Z1)

dW1 = 1 / m * dZ1.dot(X.T)

db1 = 1 / m * np.sum(dZ1)

return dW1, db1, dW2, db2

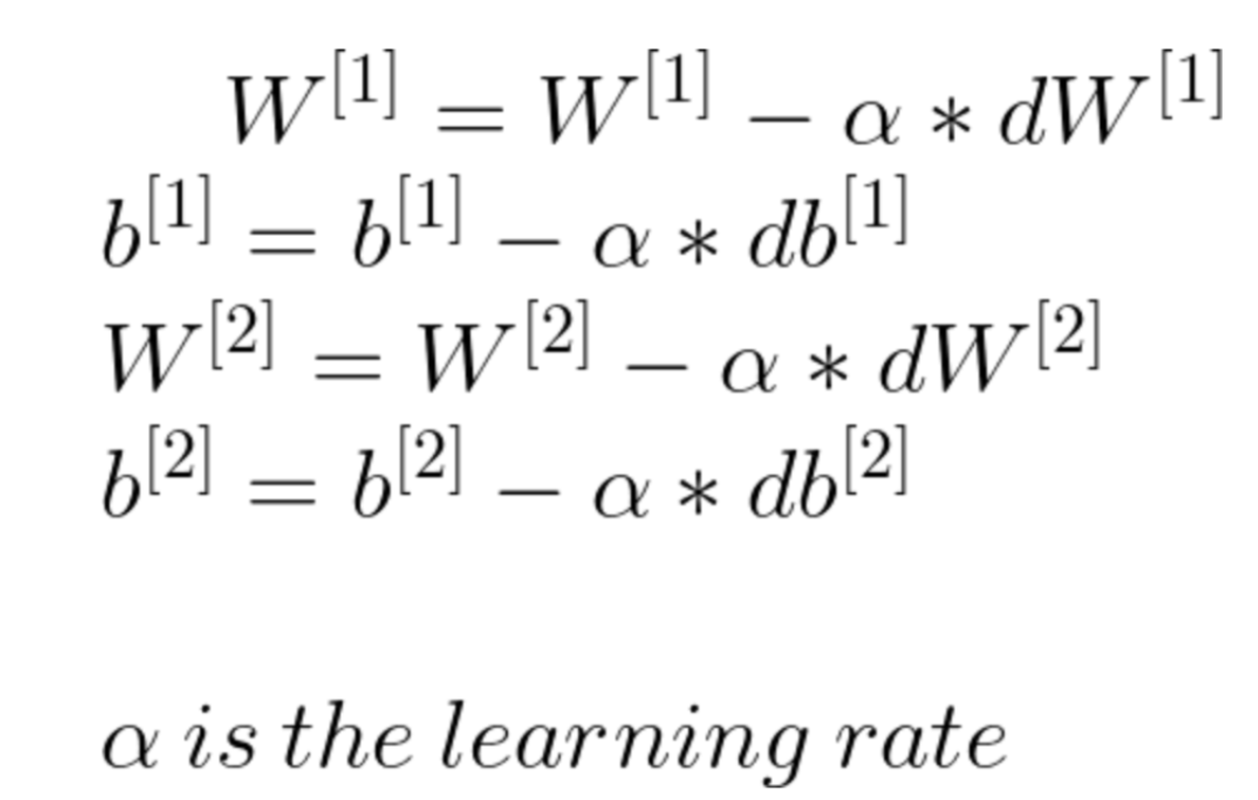

def update_params(W1, b1, W2, b2, dW1, db1, dW2, db2, alpha):

# print(W1.shape)

# print(dW1.shape)

W1 = W1 - alpha * dW1

b1 = b1 - alpha * db1

W2 = W2 - alpha * dW2

b2 = b2 - alpha * db2

return W1, b1, W2, b2

def get_predictions(A2):

return np.argmax(A2, 0)

def get_accuracy(predictions, Y):

print(predictions, Y)

return np.sum(predictions == Y) / Y.size

def gradient_descent(X, Y, alpha, iterations):

W1, b1, W2, b2 = init_params()

for i in range(iterations):

Z1, A1, Z2, A2 = forward_prop(W1, b1, W2, b2, X)

dW1, db1, dW2, db2 = backward_prop(Z1, A1, Z2, A2, W1, W2, X, Y)

#print(W1.shape)

#print(dW1.shape)

W1, b1, W2, b2 = update_params(W1, b1, W2, b2, dW1, db1, dW2, db2, alpha)

if i % 10 == 0:

print("Iteration: ", i)

predictions = get_predictions(A2)

print(get_accuracy(predictions, Y))

return W1, b1, W2, b2

W1, b1, W2, b2 = gradient_descent(X_train, Y_train, 0.10, 500)

def make_predictions(X, W1, b1, W2, b2):

_, _, _, A2 = forward_prop(W1, b1, W2, b2, X)

predictions = get_predictions(A2)

return predictions

def test_prediction(index, W1, b1, W2, b2):

current_image = X_train[:, index, None]

prediction = make_predictions(X_train[:, index, None], W1, b1, W2, b2)

label = Y_train[index]

print("Prediction: ", prediction)

print("Label: ", label)

current_image = current_image.reshape((28, 28)) * 255

plt.gray()

plt.imshow(current_image, interpolation='nearest')

plt.show()

test_prediction(0, W1, b1, W2, b2)

test_prediction(1, W1, b1, W2, b2)

test_prediction(2, W1, b1, W2, b2)

test_prediction(100, W1, b1, W2, b2)

test_prediction(200, W1, b1, W2, b2)

test_predictions = make_predictions(X_test, W1, b1, W2, b2)

get_accuracy(test_predictions, Y_test)